Screaming Frog SEO Spider 13.0 MultiOS

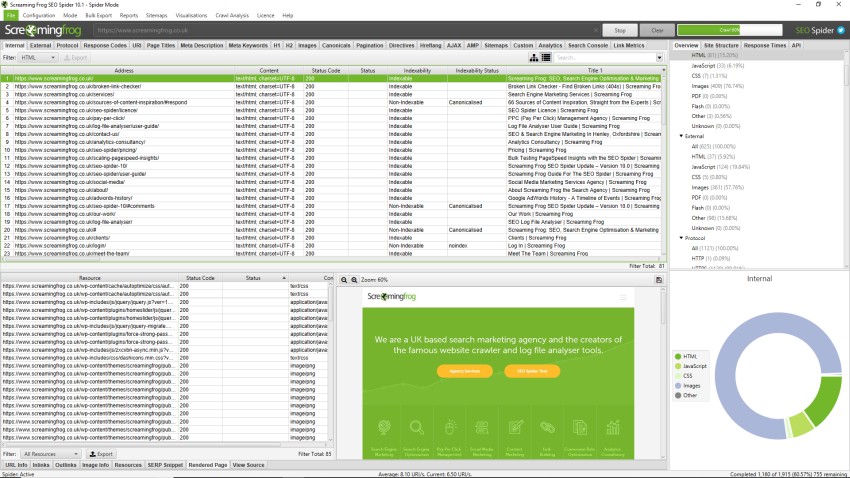

The Screaming Frog SEO Spider is a website crawler that helps you improve onsite SEO, by extracting data & auditing for common SEO issues. Download & crawl 500 URLs for free, or buy a licence to remove the limit & access advanced features.

What can you do with the SEO Spider Tool?

The SEO Spider is a powerful and flexible site crawler, able to crawl both small and very large websites efficiently, while allowing you to analyse the results in real-time. It gathers key onsite data to allow SEOs to make informed decisions.

Find Broken Links

Crawl a website instantly and find broken links (404s) and server errors. Bulk export the errors and source URLs to fix, or send to a developer.

Analyse Page Titles & Meta Data

Analyse page titles and meta descriptions during a crawl and identify those that are too long, short, missing, or duplicated across your site.
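As a rough illustration of this kind of check, the sketch below flags titles that are missing, short, long or duplicated. The function name `audit_titles` and the 30 and 60 character thresholds are assumptions made for this example; they are not the SEO Spider's own defaults or code.

```python
from collections import Counter

# Hypothetical thresholds chosen for illustration; not the tool's defaults.
MIN_TITLE_CHARS = 30
MAX_TITLE_CHARS = 60


def audit_titles(titles):
    """Map each URL to a list of title issues.

    `titles` is a dict of URL -> page title (None or "" when absent).
    """
    # Count how often each non-empty title occurs across the site.
    counts = Counter(t.strip() for t in titles.values() if t and t.strip())
    report = {}
    for url, title in titles.items():
        text = (title or "").strip()
        issues = []
        if not text:
            issues.append("missing")
        else:
            if len(text) < MIN_TITLE_CHARS:
                issues.append("short")
            if len(text) > MAX_TITLE_CHARS:
                issues.append("long")
            if counts[text] > 1:
                issues.append("duplicate")
        report[url] = issues
    return report
```

The same approach would apply to meta descriptions with different length limits.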

Extract Data with XPath

Collect any data from the HTML of a web page using CSS Path, XPath or regex. This might include social meta tags, additional headings, prices, SKUs or more!
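To give a feel for XPath-based extraction, here is a minimal sketch using Python's standard library. It assumes a well-formed (XHTML-style) page, because `xml.etree.ElementTree` only supports a subset of XPath and cannot parse sloppy real-world HTML. The sample markup, class names and the `extract_fields` helper are invented for this example.

```python
import xml.etree.ElementTree as ET

# Invented sample page; real HTML usually needs a more lenient parser.
SAMPLE_PAGE = """<html>
  <head>
    <title>Example product</title>
    <meta property="og:title" content="Example product" />
    <meta property="og:type" content="product" />
    <meta name="description" content="A sample page." />
  </head>
  <body>
    <h2>Details</h2>
    <span class="price">19.99</span>
    <span class="sku">ABC-123</span>
  </body>
</html>"""


def extract_fields(markup):
    """Pull social meta tags, a price and a SKU out of well-formed markup."""
    root = ET.fromstring(markup)
    # All <meta> elements that carry a "property" attribute (e.g. Open Graph).
    social = {
        m.get("property"): m.get("content")
        for m in root.findall(".//meta[@property]")
    }
    # First <span> whose class attribute equals the given value.
    price = root.findtext(".//span[@class='price']")
    sku = root.findtext(".//span[@class='sku']")
    return {"social": social, "price": price, "sku": sku}
```

Calling `extract_fields(SAMPLE_PAGE)` returns the two Open Graph tags plus the price and SKU strings from the sample.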

Generate XML Sitemaps

Quickly create XML Sitemaps and Image XML Sitemaps, with advanced configuration over URLs to include, last modified, priority and change frequency.
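For reference, a sitemap following the sitemaps.org protocol can be sketched in a few lines of Python. The `build_sitemap` helper and the entry dictionaries are assumptions for illustration only and say nothing about how the SEO Spider generates its files.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML as a string.

    Each entry is a dict with a required "loc" and optional
    "lastmod", "changefreq" and "priority" keys.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Optional fields are only written when supplied.
        for field in ("lastmod", "changefreq", "priority"):
            if field in entry:
                ET.SubElement(url, field).text = str(entry[field])
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    {"loc": "https://example.com/", "lastmod": "2020-07-01",
     "changefreq": "weekly", "priority": 0.8},
    {"loc": "https://example.com/about"},
])
```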

Crawl JavaScript Websites

Render web pages using the integrated Chromium WRS to crawl dynamic, JavaScript-rich websites and frameworks, such as Angular, React and Vue.js.

Audit Redirects

Find temporary and permanent redirects, identify redirect chains and loops, or upload a list of URLs to audit in a site migration.
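The idea of chains and loops can be sketched as follows, assuming the redirects have already been collected into a simple source-to-target mapping. The `trace_redirects` function and its status labels are hypothetical names for this example.

```python
def trace_redirects(start, redirects):
    """Follow redirects from `start` and classify the result.

    `redirects` maps a URL to the URL it redirects to.
    Returns (chain, status) where status is one of
    "none", "redirect", "chain" or "loop".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            # We have come back to a URL already visited.
            return chain, "loop"
        seen.add(current)
    if len(chain) == 1:
        return chain, "none"
    # A single hop is a plain redirect; two or more hops form a chain.
    return chain, "redirect" if len(chain) == 2 else "chain"
```

With a mapping such as `{"/a": "/b", "/b": "/c"}`, tracing `/a` yields the full path `/a`, `/b`, `/c` and the status `"chain"`.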

Discover Duplicate Content

Discover exact duplicate URLs with an MD5 algorithmic check, find partially duplicated elements such as page titles, descriptions or headings, and identify low content pages.
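An exact-duplicate check based on MD5 can be sketched like this: hash each page body and group URLs that share a digest. The `find_exact_duplicates` helper is an assumption for illustration; what exactly the SEO Spider hashes is not stated here.

```python
import hashlib
from collections import defaultdict


def find_exact_duplicates(pages):
    """Group URLs whose body text is byte-for-byte identical.

    `pages` is a dict of URL -> page body (str).
    Returns a list of URL groups, each with two or more members.
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.md5(body.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Note that a hash only catches exact matches; a single changed character gives a different digest, so near duplicates need a different technique.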

Review Robots & Directives

View URLs blocked by robots.txt, meta robots or X-Robots-Tag directives such as ‘noindex’ or ‘nofollow’, as well as canonicals and rel=“next” and rel=“prev”.
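To show what "blocked by robots.txt" means in practice, the sketch below uses Python's standard `urllib.robotparser` to test URLs against a set of rules. The robots.txt content, the `ExampleBot` user-agent and the `blocked_urls` helper are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Invented rules for illustration.
ROBOTS_TXT = """User-agent: *
Disallow: /private/
Allow: /
"""


def blocked_urls(robots_txt, urls, user_agent="ExampleBot"):
    """Return the URLs that the given user-agent may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


blocked = blocked_urls(ROBOTS_TXT, [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/blog/post",
])
```

This covers robots.txt only; meta robots and X-Robots-Tag directives live in the page HTML and HTTP headers and have to be read from the response itself.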

Integrate with GA, GSC & PSI

Connect to the Google Analytics, Search Console and PageSpeed Insights APIs and fetch user and performance data for all URLs in a crawl for greater insight.

Visualise Site Architecture

Evaluate internal linking and URL structure using interactive crawl and directory force-directed diagrams and tree graph site visualisations.

The SEO Spider Tool Crawls & Reports On...

The Screaming Frog SEO Spider is an SEO auditing tool, built by real SEOs with thousands of users worldwide. A quick summary of some of the data collected in a crawl includes:

Errors – Client errors such as broken links & server errors (No responses, 4XX client & 5XX server errors).

Redirects – Permanent, temporary, JavaScript redirects & meta refreshes.

Blocked URLs – View & audit URLs disallowed by the robots.txt protocol.

Blocked Resources – View & audit blocked resources in rendering mode.

External Links – View all external links, their status codes and source pages.

Security – Discover insecure pages, mixed content, insecure forms, missing security headers and more.

URI Issues – Non ASCII characters, underscores, uppercase characters, parameters, or long URLs.

Duplicate Pages – Discover exact and near duplicate pages using advanced algorithmic checks.

Page Titles – Missing, duplicate, long, short or multiple title elements.

Meta Description – Missing, duplicate, long, short or multiple descriptions.

Meta Keywords – Mainly for reference or regional search engines, as they are not used by Google, Bing or Yahoo.

File Size – Size of URLs & Images.

Response Time – View how long pages take to respond to requests.

Last-Modified Header – View the last modified date in the HTTP header.

Crawl Depth – View how deep a URL is within a website’s architecture.

Word Count – Analyse the number of words on every page.

H1 – Missing, duplicate, long, short or multiple headings.

H2 – Missing, duplicate, long, short or multiple headings.

Meta Robots – Index, noindex, follow, nofollow, noarchive, nosnippet etc.

Meta Refresh – Including target page and time delay.

Canonicals – Link elements & canonical HTTP headers.

X-Robots-Tag – See directives issued via the HTTP header.

Pagination – View rel=“next” and rel=“prev” attributes.

Follow & Nofollow – View meta nofollow, and nofollow link attributes.

Redirect Chains – Discover redirect chains and loops.

hreflang Attributes – Audit missing confirmation links, inconsistent & incorrect language codes, non-canonical hreflang and more.

Inlinks – View all pages linking to a URL, the anchor text and whether the link is follow or nofollow.

Outlinks – View all pages a URL links out to, as well as resources.

Anchor Text – All link text. Alt text from images with links.

Rendering – Crawl JavaScript frameworks like AngularJS and React, by crawling the rendered HTML after JavaScript has executed.

AJAX – Select to obey Google’s now deprecated AJAX Crawling Scheme.

Images – All URLs with the image link & all images from a given page. Flags images over 100 KB, missing alt text and alt text over 100 characters.

User-Agent Switcher – Crawl as Googlebot, Bingbot, Yahoo! Slurp, mobile user-agents or your own custom UA.

Custom HTTP Headers – Supply any header value in a request, from Accept-Language to cookie.

Custom Source Code Search – Find anything you want in the source code of a website! Whether that’s Google Analytics code, specific text, or code etc.

Custom Extraction – Scrape any data from the HTML of a URL using XPath, CSS Path selectors or regex.

Google Analytics Integration – Connect to the Google Analytics API and pull in user and conversion data directly during a crawl.

Google Search Console Integration – Connect to the Google Search Analytics API and collect impression, click and average position data against URLs.

PageSpeed Insights Integration – Connect to the PSI API for Lighthouse metrics, speed opportunities, diagnostics and Chrome User Experience Report (CrUX) data at scale.

External Link Metrics – Pull external link metrics from Majestic, Ahrefs and Moz APIs into a crawl to perform content audits or profile links.

XML Sitemap Generation – Create an XML sitemap and an image sitemap using the SEO spider.

Custom robots.txt – Download, edit and test a site’s robots.txt using the new custom robots.txt.

Rendered Screen Shots – Fetch, view and analyse the rendered pages crawled.

Store & View HTML & Rendered HTML – Essential for analysing the DOM.

AMP Crawling & Validation – Crawl AMP URLs and validate them, using the official integrated AMP Validator.

XML Sitemap Analysis – Crawl an XML Sitemap independently or as part of a crawl, to find missing, non-indexable and orphan pages.

Visualisations – Analyse the internal linking and URL structure of the website, using the crawl and directory tree force-directed diagrams and tree graphs.

Structured Data & Validation – Extract & validate structured data against Schema.org specifications and Google search features.

Spelling & Grammar – Spell & grammar check your website in over 25 different languages.
